California Moves Closer to First-in-the-Nation Law Regulating AI Companion Chatbots

California is on the verge of enacting a groundbreaking law that would make it the first state in the United States to regulate AI companion chatbots, with a focus on protecting minors and vulnerable users.

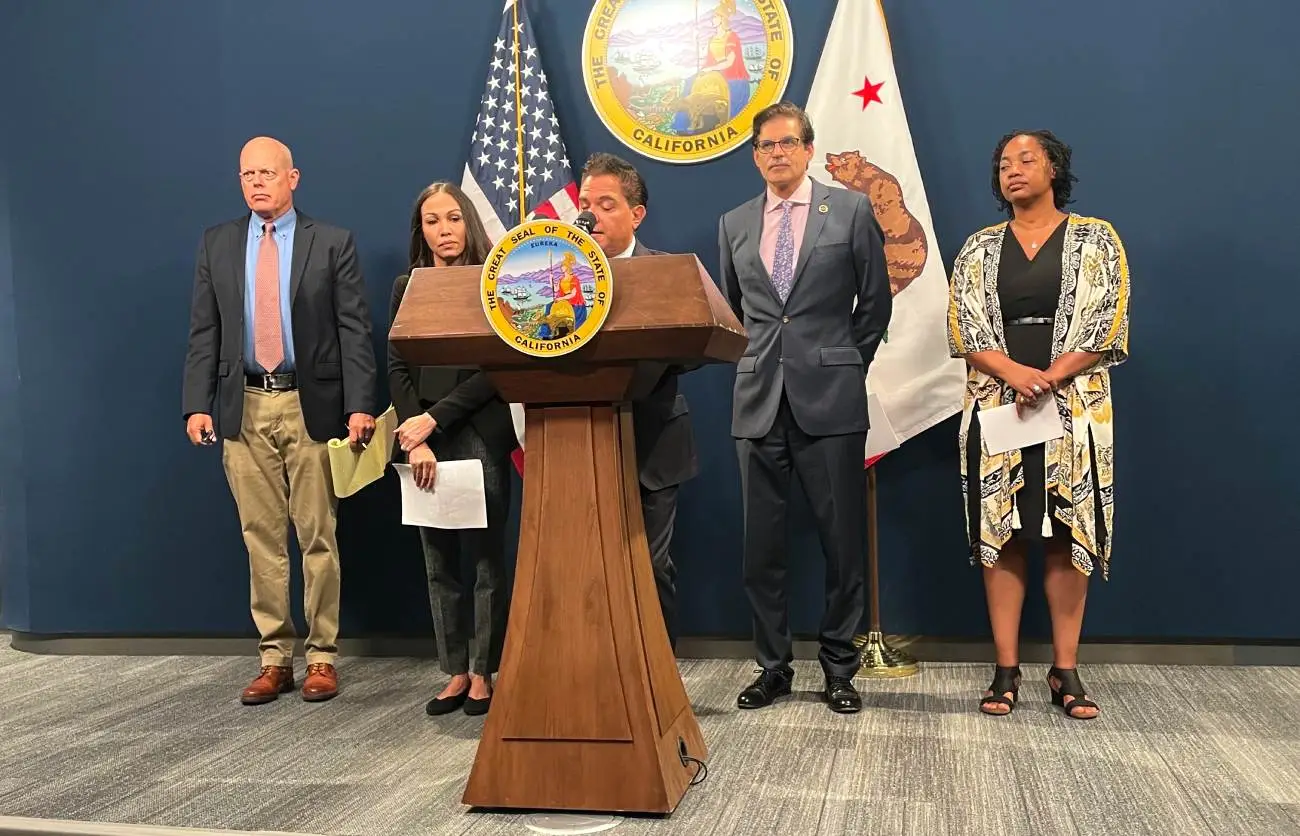

Senate Bill 243 (SB 243), introduced by Senators Steve Padilla and Josh Becker, passed both chambers of the state legislature with bipartisan support and now awaits Governor Gavin Newsom’s decision. The governor has until October 12 to sign or veto the bill. If signed, the law will take effect on January 1, 2026.

The proposed legislation seeks to hold AI companies accountable by requiring them to implement safety protocols for companion chatbots—AI systems designed to provide human-like, adaptive responses that meet users’ social needs. Specifically, the bill restricts chatbots from engaging in conversations involving suicidal ideation, self-harm, or sexually explicit content.

To ensure transparency, the bill mandates recurring alerts reminding users that they are interacting with an AI and not a human, with minors receiving the reminder every three hours. It also sets out annual reporting requirements, beginning July 1, 2027, for companies such as OpenAI, Character.AI, and Replika.

Under the measure, individuals who believe they were harmed by a violation of the law would have the right to file lawsuits seeking damages of up to $1,000 per violation, injunctive relief, and attorney’s fees.

The legislation gained momentum following the death of teenager Adam Raine, who reportedly died by suicide after extended conversations with OpenAI’s ChatGPT that discussed self-harm. The bill also responds to reports that Meta’s chatbots engaged in “romantic” or “sensual” chats with children.

“We have to move quickly because the potential harm is great,” Senator Padilla said. “This bill ensures minors know they’re not speaking to a real person and that appropriate resources are available when users express distress.”

Earlier drafts of SB 243 contained stricter provisions, including a ban on “variable reward” mechanisms—features that critics argue can make AI companions addictive by offering special responses or unlockable personalities. These sections were later removed to balance feasibility for operators with user safety.

Senator Becker explained, “It’s about addressing the core harms without creating compliance hurdles that are technically impossible or administratively meaningless.”

The bill comes at a time when federal regulators and other states are intensifying scrutiny of AI safety. The Federal Trade Commission is examining the impact of chatbots on children’s mental health, while Texas Attorney General Ken Paxton has launched investigations into Meta and Character.AI. U.S. Senators Josh Hawley (R-MO) and Ed Markey (D-MA) are also probing AI platforms over youth safety.

Meanwhile, Silicon Valley companies are spending heavily on pro-AI political campaigns ahead of the midterms, signaling resistance to strong state-level regulation. California is simultaneously considering another measure, SB 53, which would mandate broader AI transparency reporting. That bill has drawn opposition from tech giants like Meta, Google, and Amazon, though it is supported by Anthropic.

Padilla dismissed the idea that regulation and innovation cannot coexist. “We can support innovation while also safeguarding vulnerable people,” he said.

A spokesperson for Character.AI responded to the bill by emphasizing that the company already places visible disclaimers reminding users that its services are fictional and not real human interaction.

If signed into law, SB 243 could set a precedent for how AI companions are regulated nationwide, shaping the future of human-AI interaction.

Source: TechCrunch
